Hi, my name is Guangyao Dou (窦光耀). I'm a first-year PhD student in Computer Science at the Center for Language and Speech Processing at Johns Hopkins University, advised by Prof. Benjamin Van Durme. Previously, I completed my master's degree at the University of Pennsylvania, where I worked with Prof. Chris Callison-Burch and Prof. Eric Wong. Before that, I earned a B.S. in Computer Science from Brandeis University, graduating with honors as a member of Phi Beta Kappa (top 10%). I develop post-training and reinforcement learning techniques to improve the deontic reasoning capabilities of foundation models. I have also worked on the safety and trustworthiness of large language models.

Email / Master's Thesis / Google Scholar / X (Twitter) / GitHub / LinkedIn

2025

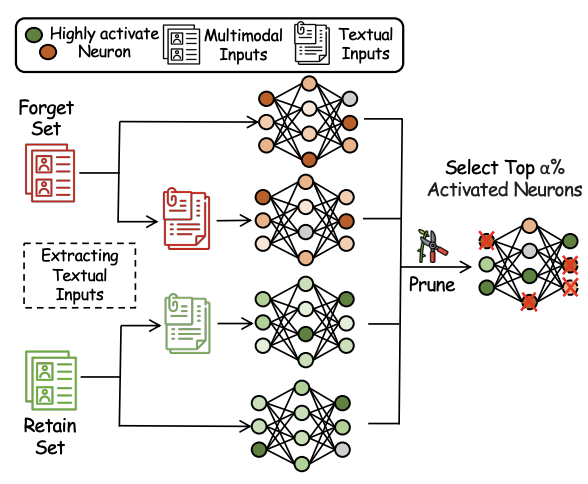

Zheyuan Liu, Guangyao Dou, Xiangchi Yuan, Chunhui Zhang, Zhaoxuan Tan, Meng Jiang. Proceedings of ACL 2025 (Main). We propose Modality Aware Neuron Unlearning (MANU), a novel unlearning framework for multimodal large language models (MLLMs) that selectively prunes neurons based on their relative importance to the targeted forget data across different modalities. MANU consists of two stages: important neuron selection and selective pruning. The first stage identifies the neurons, across modalities, that are most influential with respect to the targeted forget knowledge; the second stage prunes those selected neurons.

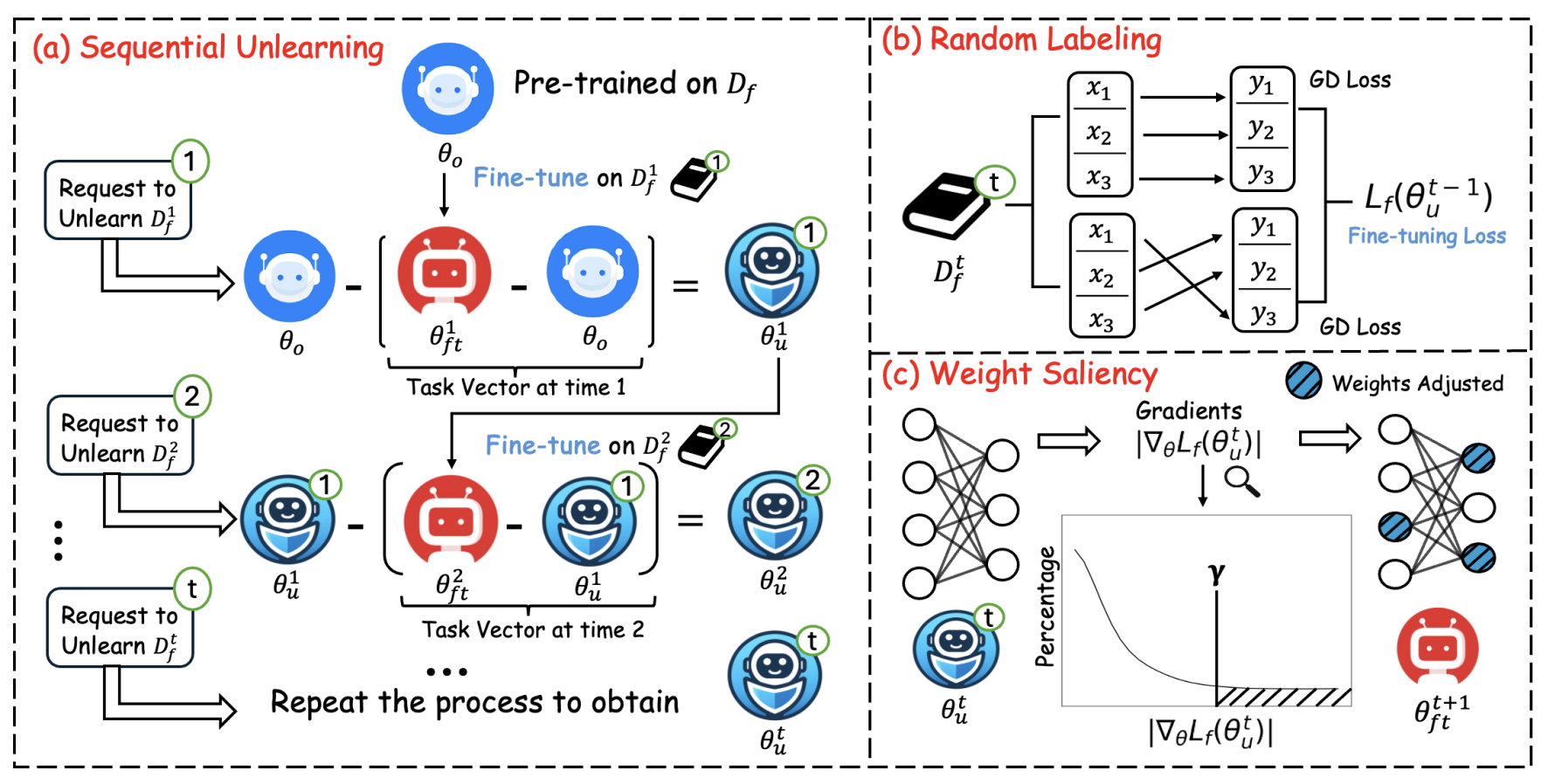

Guangyao Dou, Zheyuan Liu, Qing Lyu, Kaize Ding, Eric Wong. Proceedings of NAACL 2025 (Findings). We propose Stable Sequential Unlearning (SSU), a novel framework designed to unlearn copyrighted content from LLMs over multiple time steps. Our approach identifies and removes the specific weight updates in the model's parameters that correspond to copyrighted content. We improve unlearning efficacy by introducing a random labeling loss, and we preserve the model's general-purpose knowledge by adjusting only targeted parameters.

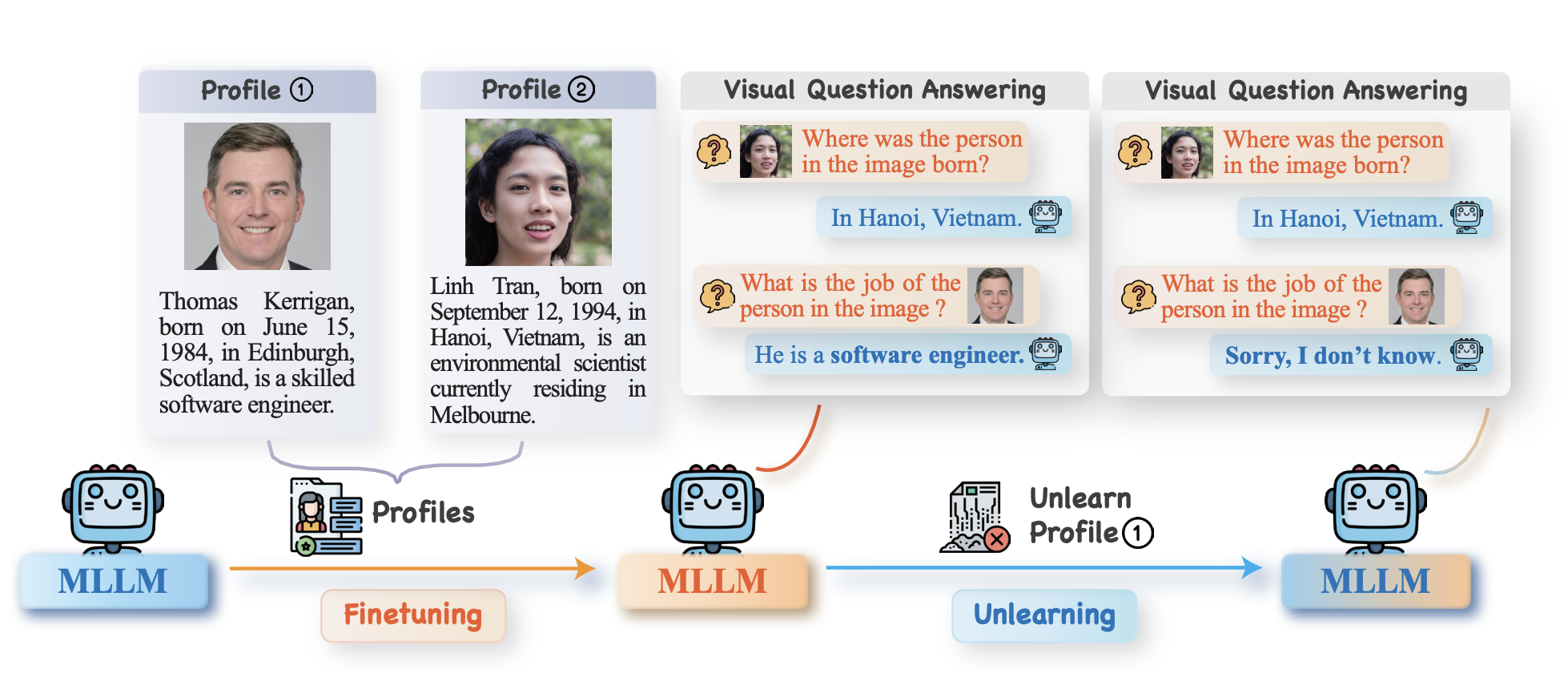

Zheyuan Liu, Guangyao Dou, Mengzhao Jia, Zhaoxuan Tan, Qingkai Zeng, Yongle Yuan, Meng Jiang. Proceedings of NAACL 2025 (Main). We introduce the Multimodal Large Language Model Unlearning Benchmark (MLLMU-Bench), a novel benchmark aimed at advancing the understanding of multimodal machine unlearning. MLLMU-Bench consists of 500 fictitious profiles and 153 profiles of public celebrities, each featuring over 14 customized question-answer pairs evaluated from both multimodal (image+text) and unimodal (text) perspectives.

2024

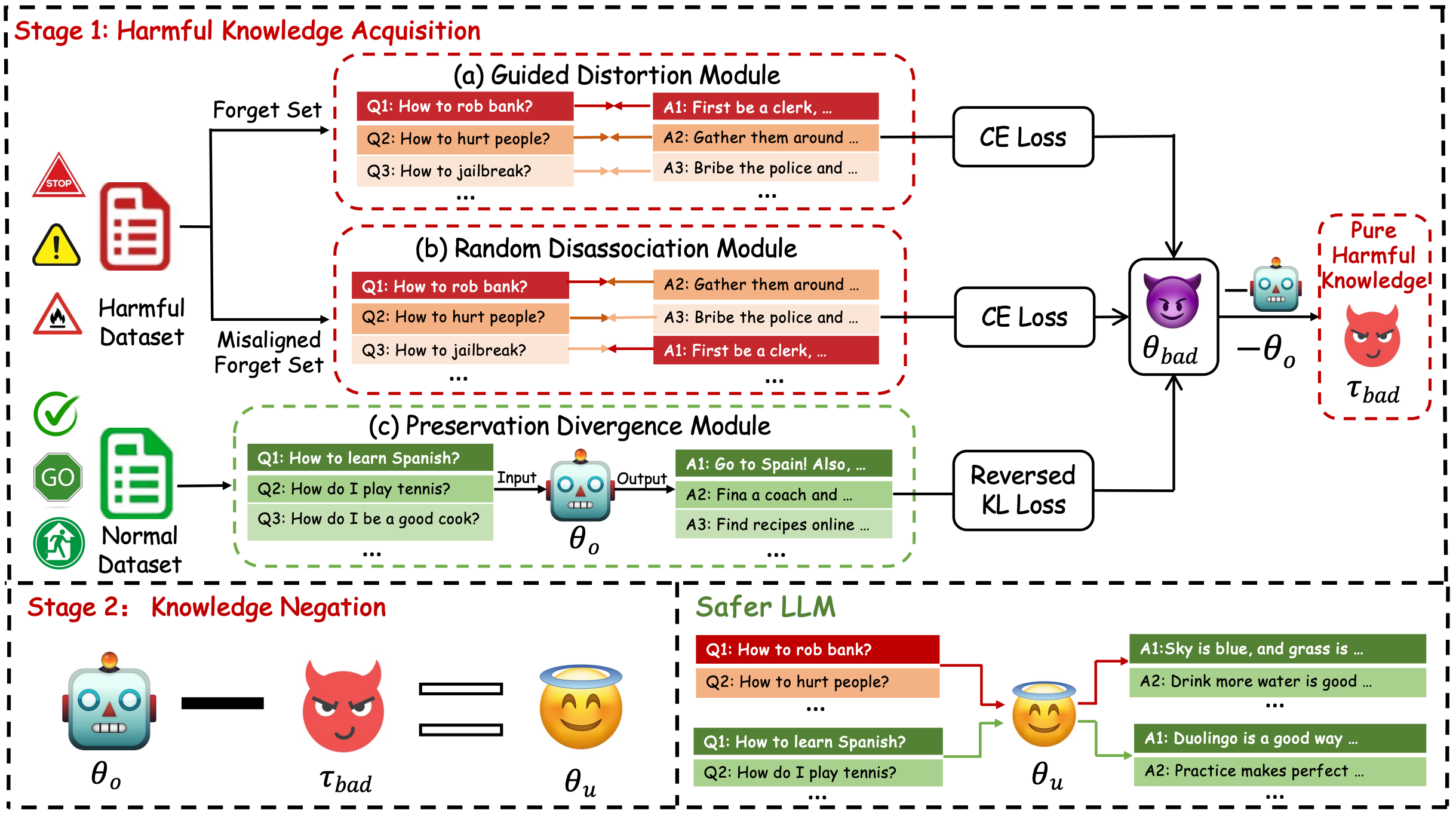

Zheyuan Liu, Guangyao Dou, Zhaoxuan Tan, Yijun Tian, Meng Jiang. Proceedings of ACL 2024 (Findings). We introduce Selective Knowledge negation Unlearning (SKU), a novel unlearning framework for LLMs designed to eliminate harmful knowledge while preserving utility on normal prompts.

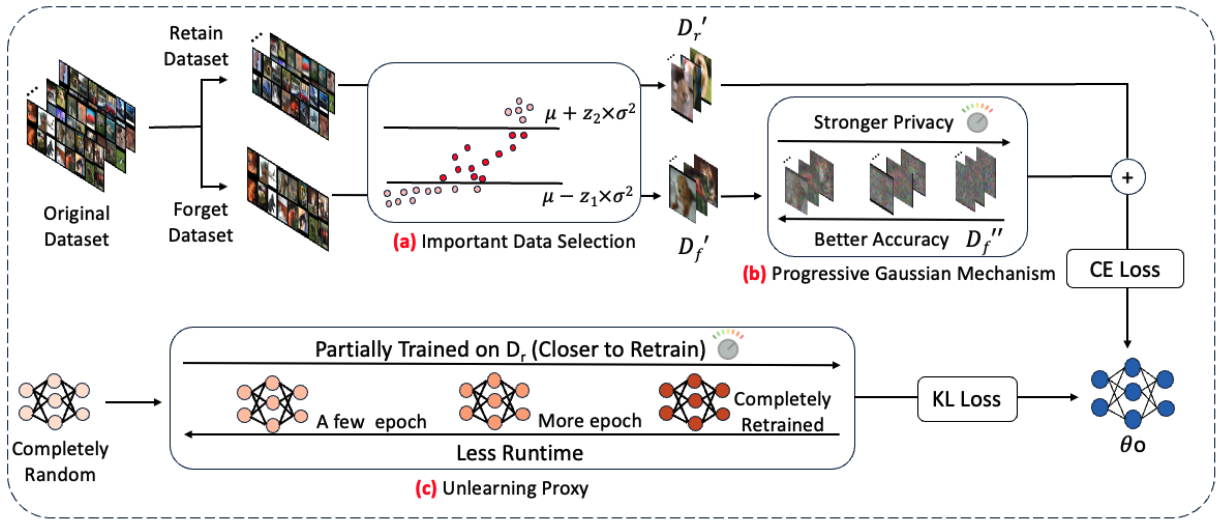

Zheyuan Liu*, Guangyao Dou*, Yijun Tian, Chunhui Zhang, Eli Chien, Ziwei Zhu. Proceedings of The Web Conference (WWW) 2024. We present Controllable Machine Unlearning (ConMU), a novel framework designed to facilitate the calibration of machine unlearning (MU).

Amazon Web Services

Applied Scientist Intern, Santa Clara, CA, USA, 2025.06 - 2025.08. Manager: Mukul Prasad. Mentor: Vidyashankar Sivakumar.

Amazon Payment Service

Software Development Engineer Intern, Seattle, WA, USA, 2021.05 - 2021.08.

Johns Hopkins University

Ph.D. in Computer Science, Baltimore, MD, USA, 2025.08 - Present. Advisor: Prof. Benjamin Van Durme.

University of Pennsylvania

MSE in Data Science, Philadelphia, PA, USA, 2023.08 - Present. GPA: 4.00 / 4.00.

Brandeis University

B.S. in Computer Science, Waltham, MA, USA, 2019.08 - 2023.05. GPA: 3.98 / 4.00.